Documenting Data Pipelines: Best Practices for Reliable, Scalable Data Systems

By Dataoma Team • November 20, 2025 • 5 min read

Documenting data pipelines is essential for any team working with modern data platforms. Whether you use Airflow, dbt, Snowflake, Kafka, or event-driven architectures, the success of your pipelines depends not only on clean engineering, but also on clear, accessible, and up-to-date documentation.

Good documentation accelerates onboarding, reduces debugging time, improves collaboration, and boosts trust in the data.

In this guide, you’ll learn how to document data pipelines effectively, from high-level architecture to operational runbooks.

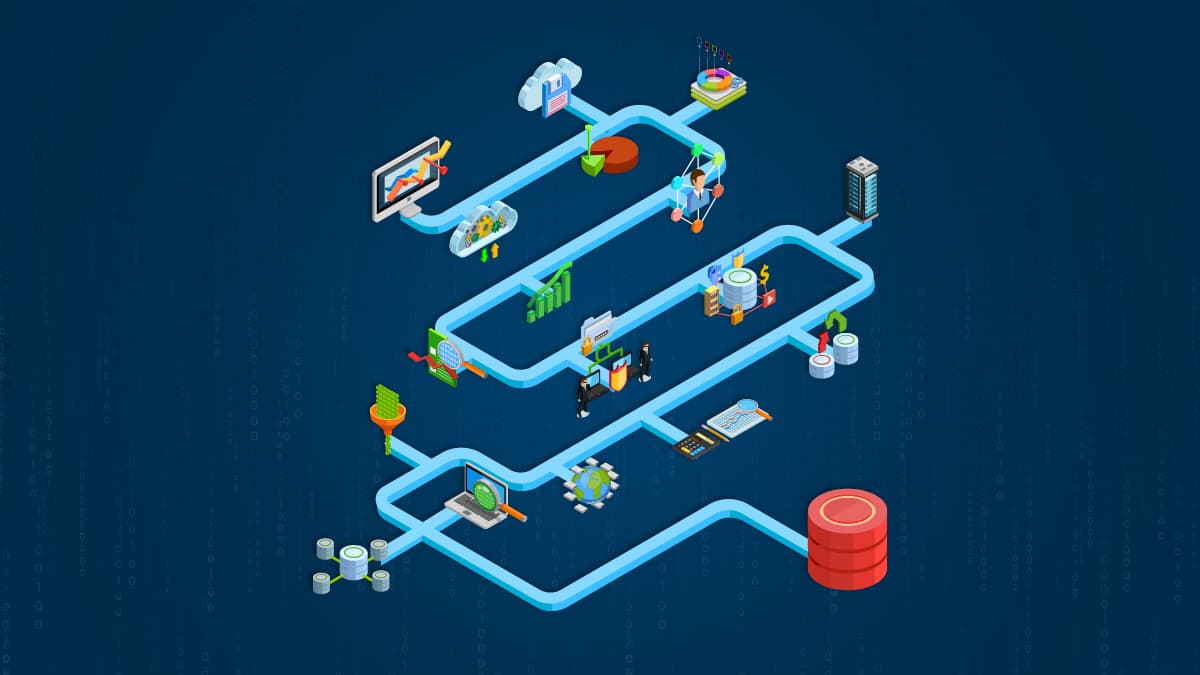

1. Create Clear High-Level Architecture Documentation

A strong documentation foundation begins with a holistic view of your data ecosystem. New team members should understand how data flows through the system within minutes, not hours.

What to include in architectural documentation

• Data Collected – Describe data sources, refresh frequency, ownership, and the business problem addressed.

• Technology Stack – List ingestion, transformation, orchestration, storage, and consumption tools (e.g., Airflow, Dagster, dbt, Kafka, Snowflake).

• System Flow Diagrams – Visualize the path: Source → Transformations → Storage → Consumption.
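
One way to keep this information reviewable is to store it as structured data next to the pipeline code and generate the flow diagram from it. The sketch below assumes Python 3.9+ and renders a Mermaid flowchart. The `PipelineDoc` class, its field names, and the sample `orders_daily` pipeline are hypothetical illustrations, not part of any tool mentioned in this article.

```python
from dataclasses import dataclass, field


@dataclass
class PipelineDoc:
    """Hypothetical structured record of one pipeline's architecture notes."""
    name: str
    sources: list[str]
    refresh: str            # refresh frequency, e.g. "hourly" or "daily"
    owner: str
    business_problem: str
    stages: list[str] = field(default_factory=list)  # ordered: source -> consumption


def to_mermaid(doc: PipelineDoc) -> str:
    """Render the ordered stages as a left-to-right Mermaid flowchart."""
    lines = ["flowchart LR"]
    # One node per stage, labelled with the stage description.
    for i, stage in enumerate(doc.stages):
        lines.append(f'    s{i}["{stage}"]')
    # One edge between each consecutive pair of stages.
    for i in range(len(doc.stages) - 1):
        lines.append(f"    s{i} --> s{i + 1}")
    return "\n".join(lines)


orders = PipelineDoc(
    name="orders_daily",
    sources=["shop_db.orders"],
    refresh="daily",
    owner="analytics-eng",
    business_problem="Daily revenue reporting",
    stages=["Source: shop_db", "Transform: dbt", "Storage: Snowflake", "Consumption: BI dashboard"],
)
print(to_mermaid(orders))
```

The generated text can be pasted into any Mermaid renderer. Because the diagram is derived from the same record that holds ownership and refresh details, it is less likely to drift out of date than a hand-drawn image.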

Why high-level documentation matters

• Reduces onboarding time

• Helps engineers understand dependencies

• Provides a shared language across teams

• Simplifies future redesigns or migrations

2. Streamline Your Pipeline Tooling

A minimal, well-chosen tech stack is easier to document and maintain. Reducing the number of tools results in fewer integration issues, fewer edge cases, fewer systems to maintain, and fewer documents to update.

Recommended streamlined flow: Source → Transformation → Storage → Consumption

Standardization reduces cognitive load and boosts team productivity.

3. Tests Are Documentation and Documentation Is Tests

Tests communicate how your pipeline behaves far more clearly than long text explanations. They show exactly what enters a pipeline and what comes out.

Best practices

• Unit tests for small, deterministic transformation logic

• Integration tests covering end-to-end pipeline behavior

• Versioned sample input/output data (e.g., tests/fixtures/)

• Readable, representative fixtures mirroring real-world cases

Tests act as living documentation, ensuring pipelines stay robust as they evolve.
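
Here is a minimal sketch of a unit test that doubles as documentation. It assumes pytest-style discovery, where plain `assert` statements go inside `test_*` functions. The `normalize_orders` transformation, its field names, and the inline fixture are hypothetical. In a real repository the fixture data would more likely live under `tests/fixtures/`.

```python
# Hypothetical transformation: normalize raw order rows into a reporting shape.
def normalize_orders(rows: list[dict]) -> list[dict]:
    """Drop cancelled orders and convert amounts from cents to currency units."""
    return [
        {"order_id": r["id"], "amount": r["amount_cents"] / 100}
        for r in rows
        if r["status"] != "cancelled"
    ]


# A small, readable fixture mirroring real cases, including an edge case
# (a cancelled order) and a zero-amount order.
FIXTURE_INPUT = [
    {"id": 1, "amount_cents": 1250, "status": "paid"},
    {"id": 2, "amount_cents": 999, "status": "cancelled"},
    {"id": 3, "amount_cents": 0, "status": "paid"},
]
EXPECTED_OUTPUT = [
    {"order_id": 1, "amount": 12.5},
    {"order_id": 3, "amount": 0.0},
]


def test_normalize_orders_matches_fixture():
    # The test states exactly what goes in and what must come out.
    assert normalize_orders(FIXTURE_INPUT) == EXPECTED_OUTPUT


if __name__ == "__main__":
    test_normalize_orders_matches_fixture()
    print("ok")
```

A reader who has never seen `normalize_orders` can learn its contract from the fixture alone. If the behavior changes, the test fails before the documentation silently goes stale.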

💡 Special tip: Tools like Dataoma can auto-detect business rules and turn them into testable assumptions (e.g., no NULLs, Age > 18, identifiers are unique), keeping documentation aligned with actual data behavior.
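
This article does not describe how Dataoma detects rules, so the following is not its implementation. It is a hand-written sketch that expresses the tip's three example rules (no NULLs, Age > 18, unique identifiers) as executable checks over plain Python dicts. The `check_rules` function and the `customer_id`/`age` field names are hypothetical.

```python
def check_rules(rows: list[dict]) -> list[str]:
    """Return human-readable rule violations; an empty list means all rules hold."""
    violations = []
    seen_ids = set()
    for i, row in enumerate(rows):
        # Rule 1: required fields must not be NULL (None in Python).
        for col in ("customer_id", "age"):
            if row.get(col) is None:
                violations.append(f"row {i}: {col} is NULL")
        # Rule 2: age must be greater than 18 (skipped when age is NULL).
        age = row.get("age")
        if age is not None and not age > 18:
            violations.append(f"row {i}: age {age} is not > 18")
        # Rule 3: customer identifiers must be unique.
        cid = row.get("customer_id")
        if cid is not None:
            if cid in seen_ids:
                violations.append(f"row {i}: duplicate customer_id {cid}")
            seen_ids.add(cid)
    return violations


rows = [
    {"customer_id": "c1", "age": 34},
    {"customer_id": "c2", "age": 17},
    {"customer_id": "c1", "age": None},
]
for problem in check_rules(rows):
    print(problem)
```

On this sample, the checks flag the under-age second row and the third row's NULL age and repeated `customer_id`. Each rule name doubles as a one-line piece of documentation about what the data is expected to look like.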

4. Build Strong Operational Documentation

Operational documentation explains how to run, debug, and monitor your data pipelines. It is critical for incident response and on-call rotations.

What to document

• How to manually run pipelines

• Common failures and error patterns

• Incident runbooks

• Known limitations or edge cases

• Links to monitoring dashboards and alert rules

If an issue happens once and might happen again, document it.
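
Known failure patterns become more useful when they can be matched against real error output. The sketch below assumes runbook entries are kept as data in the pipeline repository. It looks up the first entry whose regular expression matches a log line. The `RunbookEntry` class, the patterns, the remediation text, and the `example.com` dashboard URLs are all hypothetical placeholders.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class RunbookEntry:
    """One known failure mode: how to recognize it and what to do about it."""
    pattern: str      # regular expression matched against error/log lines
    summary: str
    action: str       # first remediation step for the on-call engineer
    dashboard: str    # link to the relevant monitoring view (placeholder)


# Hypothetical entries; patterns, actions, and URLs are illustrative only.
RUNBOOK = [
    RunbookEntry(
        pattern=r"timeout .* source API",
        summary="Upstream API timed out",
        action="Re-run the ingestion task manually once the upstream API is healthy.",
        dashboard="https://example.com/dashboards/ingestion",
    ),
    RunbookEntry(
        pattern=r"duplicate key value",
        summary="Duplicate primary key during load",
        action="Check the upstream extract for replayed batches before re-running.",
        dashboard="https://example.com/dashboards/loads",
    ),
]


def lookup(log_line: str) -> Optional[RunbookEntry]:
    """Return the first runbook entry whose pattern matches the log line, if any."""
    for entry in RUNBOOK:
        if re.search(entry.pattern, log_line, flags=re.IGNORECASE):
            return entry
    return None


hit = lookup("ERROR: timeout after 30s calling source API /orders")
print(hit.summary if hit else "No runbook entry: document this failure!")
```

A `None` result is the signal that the incident is new and should get its own entry.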

5. Automate Documentation Whenever Possible

Automated documentation stays fresh and reduces manual workload.

• Warehouse-generated schema references

• dbt documentation for lineage, tests, and model details

• Auto-updated data catalogs or discovery platforms such as Dataoma

Automation ensures consistency and reduces maintenance overhead.
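
Warehouse-generated schema references work because the database's own catalog is the source of truth. The sketch below uses Python's built-in `sqlite3` module as a small, self-contained stand-in for a real warehouse. It reads SQLite's `PRAGMA table_info` output and regenerates a Markdown schema page. In Snowflake or another warehouse, you would query its information schema instead. The `schema_markdown` helper and the `orders` table are hypothetical.

```python
import sqlite3


def schema_markdown(conn: sqlite3.Connection) -> str:
    """Generate a Markdown schema reference from the database's own catalog."""
    out = []
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        out.append(f"## {table}")
        out.append("| column | type | not null | primary key |")
        out.append("|---|---|---|---|")
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
        # Table names come from sqlite_master, not from user input.
        for _cid, name, col_type, notnull, _default, pk in conn.execute(
            f"PRAGMA table_info('{table}')"
        ):
            not_null = "yes" if notnull else "no"
            is_pk = "yes" if pk else "no"
            out.append(f"| {name} | {col_type} | {not_null} | {is_pk} |")
        out.append("")
    return "\n".join(out)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id TEXT NOT NULL, amount REAL)"
)
print(schema_markdown(conn))
```

Running a generator like this in CI after each migration could keep the schema page in sync without manual edits.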

6. Document When It Matters

Documentation is valuable, but documenting too early can waste time, especially on pipelines that will soon change.

When not to document heavily:

• The pipeline is experimental

• Data is collected for exploration or validation only

• The business case may evolve or disappear

• You’re rapidly prototyping for stakeholders

Focus documentation effort where ROI is highest: stable, production-critical pipelines.

💡 Special tip: For fast insights without heavy documentation, tools like Dataoma Summaries can automatically surface important metadata and patterns.
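
This article does not describe how Dataoma Summaries works, so the sketch below is not its method. It only illustrates the general idea of automatically surfacing column-level metadata from a sample of records: null rate, distinct count, and most common value. The `summarize` function and the sample data are hypothetical, and a missing key is treated the same as a NULL.

```python
from collections import Counter


def summarize(rows: list[dict]) -> dict[str, dict]:
    """Compute simple per-column metadata for a list of records."""
    columns = sorted({col for row in rows for col in row})
    summary = {}
    for col in columns:
        # Missing keys count as NULL, just like explicit None values.
        values = [row.get(col) for row in rows]
        non_null = [v for v in values if v is not None]
        counts = Counter(non_null)
        summary[col] = {
            "null_rate": round(1 - len(non_null) / len(values), 3),
            "distinct": len(counts),
            "most_common": counts.most_common(1)[0][0] if counts else None,
        }
    return summary


sample = [
    {"country": "FR", "plan": "pro"},
    {"country": "FR", "plan": None},
    {"country": "DE", "plan": "free"},
    {"country": None, "plan": "pro"},
]
for column, stats in summarize(sample).items():
    print(column, stats)
```

Metadata like this is cheap to compute on an experimental pipeline. It can give stakeholders a quick picture of the data before anyone invests in full documentation.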

Final Thoughts

The best data engineering teams treat documentation as a core part of their workflow. When done well, documentation:

• improves reliability

• strengthens trust

• accelerates onboarding

• reduces debugging time

• supports scalable data operations

By applying these best practices (clear architecture, strong testing, streamlined tooling, operational readiness, and strategic automation), you’ll build a data ecosystem that is maintainable, trustworthy, and future-proof.

Your future team (and your future self) will thank you.
